AGI, generativeAI, Grok, DeepSeek & Expert Models in Healthcare

Updated on 9/9/2025

Conversation

Updated on 7/15/2025

AlphaGenome Unpacked: Promise, Progress, and What Comes Next for AI in Genomics


Join us for a deep dive into DeepMind’s newest foundation model for regulatory genomics, and where it goes next. AlphaGenome is DeepMind’s boldest step yet in decoding the regulatory genome: a single deep learning model that predicts thousands of functional genomic outputs (from gene expression and splicing to 3D genome structure) from up to 1 Mb of DNA sequence. It’s already setting new benchmarks:

- Outperforms tools like Borzoi, ChromBPNet, Orca, and SpliceAI

- Achieves base-pair resolution across 11 modalities and 5,930 human tracks

- Predicts variant effects across all modalities in <1 second on a single GPU

But what does that mean for biomedical researchers? And what’s still missing?

We’ll break down where AlphaGenome excels, where gaps remain, and how tools like curated knowledge graphs can provide structured biological context.

From Google DeepMind

Source: https://deepmind.google/discover/blog/alphagenome-ai-for-better-understanding-the-genome/

AlphaGenome: AI for better understanding the genome

Introducing a new, unifying DNA sequence model that advances regulatory variant-effect prediction and promises to shed new light on genome function — now available via API.

The genome is our cellular instruction manual. It’s the complete set of DNA which guides nearly every part of a living organism, from appearance and function to growth and reproduction. Small variations in a genome’s DNA sequence can alter an organism’s response to its environment or its susceptibility to disease. But deciphering how the genome’s instructions are read at the molecular level — and what happens when a small DNA variation occurs — is still one of biology’s greatest mysteries.

Today, we introduce AlphaGenome, a new artificial intelligence (AI) tool that more comprehensively and accurately predicts how single variants or mutations in human DNA sequences impact a wide range of biological processes regulating genes. This was enabled, among other factors, by technical advances allowing the model to process long DNA sequences and output high-resolution predictions.

To advance scientific research, we’re making AlphaGenome available in preview via our AlphaGenome API for non-commercial research, and planning to release the model in the future.

We believe AlphaGenome can be a valuable resource for the scientific community, helping scientists better understand genome function, disease biology, and ultimately, drive new biological discoveries and the development of new treatments.

How AlphaGenome works

Our AlphaGenome model takes a long DNA sequence as input — up to 1 million letters, also known as base-pairs — and predicts thousands of molecular properties characterising its regulatory activity. It can also score the effects of genetic variants or mutations by comparing predictions of mutated sequences with unmutated ones.
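The REF-versus-ALT comparison described above can be sketched in plain Python. This is a minimal illustration, not the AlphaGenome API. `toy_gc_predictor` is a hypothetical stand-in for the model that returns the local GC fraction around each base as a single toy track. `apply_snv` and `score_variant` are illustrative helper names. The real model reads up to 1 Mb of sequence and returns thousands of tracks per call.

```python
from typing import Callable, List

# A "predictor" maps a DNA sequence to one value per base-pair (a single toy track).
Predictor = Callable[[str], List[float]]


def apply_snv(seq: str, pos: int, ref: str, alt: str) -> str:
    """Return seq with a single-nucleotide variant applied at 0-based position pos."""
    if seq[pos] != ref:
        raise ValueError(f"reference mismatch at {pos}: expected {ref}, found {seq[pos]}")
    return seq[:pos] + alt + seq[pos + 1:]


def score_variant(predict: Predictor, seq: str, pos: int, ref: str, alt: str) -> List[float]:
    """Per-position effect = prediction(ALT sequence) - prediction(REF sequence)."""
    ref_track = predict(seq)
    alt_track = predict(apply_snv(seq, pos, ref, alt))
    return [a - r for r, a in zip(ref_track, alt_track)]


def toy_gc_predictor(seq: str, window: int = 3) -> List[float]:
    """Hypothetical stand-in model: GC fraction in a small window around each base."""
    half = window // 2
    track = []
    for i in range(len(seq)):
        chunk = seq[max(0, i - half): i + half + 1]
        track.append(sum(base in "GC" for base in chunk) / len(chunk))
    return track


delta = score_variant(toy_gc_predictor, "AAAAAAA", pos=3, ref="A", alt="G")
print([round(x, 3) for x in delta])  # [0.0, 0.0, 0.333, 0.333, 0.333, 0.0, 0.0]
```

Only the three positions whose window covers the variant change. A real track delta is read the same way, but over far longer sequences and many more tracks.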

Predicted properties include where genes start and where they end in different cell types and tissues, where they get spliced, the amount of RNA being produced, and also which DNA bases are accessible, close to one another, or bound by certain proteins. Training data was sourced from large public consortia including ENCODE, GTEx, 4D Nucleome and FANTOM5, which experimentally measured these properties covering important modalities of gene regulation across hundreds of human and mouse cell types and tissues.

The AlphaGenome architecture uses convolutional layers to initially detect short patterns in the genome sequence, transformers to communicate information across all positions in the sequence, and a final series of layers to turn the detected patterns into predictions for different modalities. During training, this computation is distributed across multiple interconnected Tensor Processing Units (TPUs) for a single sequence.
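The paragraph above names three stages: convolutions that detect short patterns, attention that passes information across all positions, and output layers that turn those patterns into per-modality predictions. These can be shown as a toy forward pass in plain Python. Everything here is an assumption for illustration. The weights are random and untrained, the attention has a single head with no learned projections, and the track count is arbitrary. AlphaGenome's actual layer sizes and design are described in its preprint and are not reproduced here.

```python
import math
import random
from typing import List

Matrix = List[List[float]]  # rows = sequence positions, columns = channels
BASES = "ACGT"


def one_hot(seq: str) -> Matrix:
    """Encode DNA as one row per base-pair with 4 channels (A, C, G, T)."""
    return [[1.0 if base == b else 0.0 for b in BASES] for base in seq]


def conv1d_relu(x: Matrix, kernels: List[Matrix], bias: List[float]) -> Matrix:
    """Stage 1: zero-padded 'same' 1D convolution + ReLU, detecting short local motifs.
    kernels[c][k][i] weights input channel i at tap k for output channel c."""
    width = len(kernels[0])
    pad = width // 2
    out = []
    for pos in range(len(x)):
        row = []
        for c, kernel in enumerate(kernels):
            acc = bias[c]
            for k in range(width):
                src = pos + k - pad
                if 0 <= src < len(x):
                    acc += sum(w * v for w, v in zip(kernel[k], x[src]))
            row.append(max(0.0, acc))
        out.append(row)
    return out


def self_attention(x: Matrix) -> Matrix:
    """Stage 2: single-head scaled dot-product attention (queries = keys = values = x),
    so every position can draw on every other position in the sequence."""
    dim = len(x[0])
    out = []
    for query in x:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in x]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]  # numerically stable softmax
        total = sum(weights)
        out.append([sum(w * value[j] for w, value in zip(weights, x)) / total
                    for j in range(dim)])
    return out


def output_heads(x: Matrix, head_weights: Matrix) -> Matrix:
    """Stage 3: per-position linear read-out, one output per (hypothetical) track."""
    return [[sum(w * h for w, h in zip(track, row)) for track in head_weights] for row in x]


def toy_forward(seq: str, channels: int = 8, width: int = 5, tracks: int = 3,
                seed: int = 0) -> Matrix:
    rng = random.Random(seed)  # random, untrained weights
    kernels = [[[rng.gauss(0, 1) for _ in BASES] for _ in range(width)]
               for _ in range(channels)]
    bias = [0.0] * channels
    heads = [[rng.gauss(0, 1) for _ in range(channels)] for _ in range(tracks)]
    hidden = conv1d_relu(one_hot(seq), kernels, bias)
    hidden = self_attention(hidden)
    return output_heads(hidden, heads)


predictions = toy_forward("ACGTTGCA")
print(len(predictions), len(predictions[0]))  # 8 3
```

The output has one row per input base and one column per track. This mirrors, at toy scale, how base-resolution predictions line up with the input sequence.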

This model builds on our previous genomics model, Enformer, and is complementary to AlphaMissense, which specializes in categorizing the effects of variants within protein-coding regions. These regions cover 2% of the genome. The remaining 98%, called non-coding regions, are crucial for orchestrating gene activity and contain many variants linked to diseases. AlphaGenome offers a new perspective for interpreting these expansive sequences and the variants within them.

AlphaGenome’s distinctive features

AlphaGenome offers several distinctive features compared to existing DNA sequence models:

Long sequence-context at high resolution

Our model analyzes up to 1 million DNA letters and makes predictions at the resolution of individual letters. Long sequence context is important for covering regions regulating genes from far away and base-resolution is important for capturing fine-grained biological details.

Previous models had to trade off sequence length and resolution, which limited the range of modalities they could jointly model and accurately predict. Our technical advances address this limitation without significantly increasing the training resources — training a single AlphaGenome model (without distillation) took four hours and required half of the compute budget used to train our original Enformer model.

Comprehensive multimodal prediction

By unlocking high resolution prediction for long input sequences, AlphaGenome can predict the most diverse range of modalities. In doing so, AlphaGenome provides scientists with more comprehensive information about the complex steps of gene regulation.

Efficient variant scoring

In addition to predicting a diverse range of molecular properties, AlphaGenome can efficiently score the impact of a genetic variant on all of these properties in a second. It does this by contrasting predictions of mutated sequences with unmutated ones, and efficiently summarising that contrast using different approaches for different modalities.
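Summarising the REF/ALT contrast "using different approaches for different modalities" can be sketched as a table of per-modality scoring functions. The modality names and the two aggregators are assumptions chosen for illustration. The first is a log2 fold change of total signal, a common choice for expression-like tracks. The second is the maximum absolute per-position change, a common choice for splicing-like tracks. AlphaGenome's own variant scorers are defined in its preprint.

```python
import math
from typing import Callable, Dict, List

Track = List[float]


def log2_fold_change_of_sum(ref: Track, alt: Track, pseudocount: float = 1.0) -> float:
    """Expression-style summary: log2 ratio of total ALT signal to total REF signal."""
    return math.log2((sum(alt) + pseudocount) / (sum(ref) + pseudocount))


def max_abs_difference(ref: Track, alt: Track) -> float:
    """Splicing-style summary: the largest change at any single position."""
    return max(abs(a - r) for r, a in zip(ref, alt))


# Hypothetical modality names mapped to the summary used for each one.
SCORERS: Dict[str, Callable[[Track, Track], float]] = {
    "rna_expression": log2_fold_change_of_sum,
    "splice_site_usage": max_abs_difference,
}


def summarise_variant(ref_preds: Dict[str, Track], alt_preds: Dict[str, Track]) -> Dict[str, float]:
    """Collapse REF/ALT prediction tracks into one score per modality."""
    return {name: SCORERS[name](ref_preds[name], alt_preds[name]) for name in ref_preds}


scores = summarise_variant(
    ref_preds={"rna_expression": [1.0, 1.0, 1.0], "splice_site_usage": [0.1, 0.9, 0.0]},
    alt_preds={"rna_expression": [3.0, 3.0, 1.0], "splice_site_usage": [0.1, 0.2, 0.0]},
)
print(scores)  # rna_expression -> 1.0 (signal doubled); splice_site_usage -> about 0.7
```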

Novel splice-junction modeling

Many rare genetic diseases, such as spinal muscular atrophy and some forms of cystic fibrosis, can be caused by errors in RNA splicing — a process where parts of the RNA molecule are removed, or “spliced out”, and the remaining ends rejoined. For the first time, AlphaGenome can explicitly model the location and expression level of these junctions directly from sequence, offering deeper insights about the consequences of genetic variants on RNA splicing.

State-of-the-art performance across benchmarks

AlphaGenome achieves state-of-the-art performance across a wide range of genomic prediction benchmarks, such as predicting which parts of the DNA molecule will be in close proximity, whether a genetic variant will increase or decrease expression of a gene, or whether it will change the gene’s splicing pattern.

Bar graph showing AlphaGenome’s relative improvements on selected DNA sequence and variant effect tasks, compared against results for the current best methods in each category.

When producing predictions for single DNA sequences, AlphaGenome outperformed the best external models on 22 out of 24 evaluations. And when predicting the regulatory effect of a variant, it matched or exceeded the top-performing external models on 24 out of 26 evaluations.

This comparison included models specialized for individual tasks. AlphaGenome was the only model that could jointly predict all of the assessed modalities, highlighting its generality. Read more in our preprint.

The benefits of a unifying model

AlphaGenome’s generality allows scientists to simultaneously explore a variant’s impact on a number of modalities with a single API call. This means that scientists can generate and test hypotheses more rapidly, without having to use multiple models to investigate different modalities.

Moreover, AlphaGenome’s strong performance indicates it has learned a relatively general representation of DNA sequence in the context of gene regulation. This makes it a strong foundation for the wider community to build upon. Once the model is fully released, scientists will be able to adapt and fine-tune it on their own datasets to better tackle their unique research questions.

Finally, this approach provides a flexible and scalable architecture for the future. By extending the training data, AlphaGenome’s capabilities could be extended to yield better performance, cover more species, or include additional modalities to make the model even more comprehensive.

It’s a milestone for the field. For the first time, we have a single model that unifies long-range context, base-level precision and state-of-the-art performance across a whole spectrum of genomic tasks.

Dr. Caleb Lareau, Memorial Sloan Kettering Cancer Center

A powerful research tool

AlphaGenome’s predictive capabilities could help several research avenues:

- Disease understanding: By more accurately predicting genetic disruptions, AlphaGenome could help researchers pinpoint the potential causes of disease more precisely, and better interpret the functional impact of variants linked to certain traits, potentially uncovering new therapeutic targets. We think the model is especially suitable for studying rare variants with potentially large effects, such as those causing rare Mendelian disorders.

- Synthetic biology: Its predictions could be used to guide the design of synthetic DNA with specific regulatory function — for example, only activating a gene in nerve cells but not muscle cells.

- Fundamental research: It could accelerate our understanding of the genome by assisting in mapping its crucial functional elements and defining their roles, identifying the most essential DNA instructions for regulating a specific cell type’s function.

For example, we used AlphaGenome to investigate the potential mechanism of a cancer-associated mutation. In an existing study of patients with T-cell acute lymphoblastic leukemia (T-ALL), researchers observed mutations at particular locations in the genome. Using AlphaGenome, we predicted that the mutations would activate a nearby gene called TAL1 by introducing a MYB DNA binding motif, which replicated the known disease mechanism and highlighted AlphaGenome’s ability to link specific non-coding variants to disease genes.

AlphaGenome will be a powerful tool for the field. Determining the relevance of different non-coding variants can be extremely challenging, particularly to do at scale. This tool will provide a crucial piece of the puzzle, allowing us to make better connections to understand diseases like cancer.

Professor Marc Mansour, University College London

Current limitations

AlphaGenome marks a significant step forward, but it’s important to acknowledge its current limitations.

Like other sequence-based models, accurately capturing the influence of very distant regulatory elements, like those over 100,000 DNA letters away, is still an ongoing challenge. Another priority for future work is further increasing the model’s ability to capture cell- and tissue-specific patterns.

We haven’t designed or validated AlphaGenome for personal genome prediction, a known challenge for AI models. Instead, we focused more on characterising the performance on individual genetic variants. And while AlphaGenome can predict molecular outcomes, it doesn’t give the full picture of how genetic variations lead to complex traits or diseases. These often involve broader biological processes, like developmental and environmental factors, that are beyond the direct scope of our model.

We’re continuing to improve our models and gathering feedback to help us address these gaps.

Enabling the community to unlock AlphaGenome’s potential

AlphaGenome is now available for non-commercial use via our AlphaGenome API. Please note that our model’s predictions are intended only for research use and haven’t been designed or validated for direct clinical purposes.

Researchers worldwide are invited to get in touch with potential use-cases for AlphaGenome and to ask questions or share feedback through the community forum.

We hope AlphaGenome will be an important tool for better understanding the genome and we’re committed to working alongside external experts across academia, industry, and government organizations to ensure AlphaGenome benefits as many people as possible.

Together with the collective efforts of the wider scientific community, we hope it will deepen our understanding of the complex cellular processes encoded in the DNA sequence and the effects of variants, and drive exciting new discoveries in genomics and healthcare.

Updated on 7/13/2025

Release Grok 4 – Intelligent Internet

https://www.youtube.com/watch?v=XZBiexwnHrk

https://www.youtube.com/watch?v=camlUwrxsfs

xAI launches Grok 4, learning “The Bitter Lesson” in AI

Grok 4

https://youtu.be/KypnjJSKi4o?t=1183

Grok 4 – Cost per Task

https://youtu.be/KypnjJSKi4o?t=1258

Grok 4 | xAI


Grok 4 is the most intelligent model in the world. It includes native tool use and real-time search integration, and is available now to SuperGrok and Premium+ subscribers, as well as through the xAI API. We are also introducing a new SuperGrok Heavy tier with access to Grok 4 Heavy – the most powerful version of Grok 4.

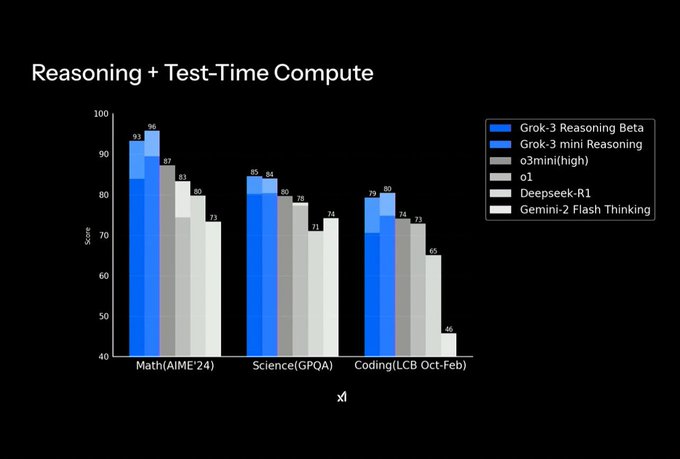

With Grok 3, we scaled next-token prediction pretraining to unprecedented levels, resulting in a model with unparalleled world knowledge and performance. We also introduced Grok 3 Reasoning, which was trained using reinforcement learning to think longer about problems and solve them with increased accuracy. During our work on Grok 3 Reasoning, we noticed scaling trends that suggested it would be possible to scale up our reinforcement learning training significantly.

For Grok 4, we utilized Colossus, our 200k GPU cluster, to run reinforcement learning to refine Grok’s reasoning abilities at a pretraining scale. This was made possible with innovations throughout the stack, from new infrastructure and algorithmic work, which increased the compute efficiency of our training by 6x, to a massive data collection effort, where we significantly expanded our verifiable training data from primarily math and coding data to many more domains. The resulting training run saw smooth performance gains while training on over an order of magnitude more compute than previously.

Figure: Humanity’s Last Exam, a deep expert-level benchmark at the frontier of human knowledge. Panels: state of the art on the full set (April 3, 2025) with Python and Internet tools; performance over training on the text-only subset with Python and Internet tools.

Grok 4 was trained with reinforcement learning to use tools. This allows Grok to augment its thinking with tools like a code interpreter and web browsing in situations which are usually challenging for large language models. When searching for real-time information or asking difficult research questions, Grok 4 chooses its own search queries, finding knowledge from across the web and diving as deeply as it needs to craft a high-quality response.

We also trained Grok to use powerful tools to find information from deep within X. Grok can use advanced keyword and semantic search tools and even view media to improve the quality of its answers.
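The control flow of this kind of tool use can be sketched as a loop. At each step the model either calls a tool or answers, and each tool result is fed back as an observation. This is a minimal sketch under stated assumptions. In Grok 4 the policy is the trained model itself, while here `toy_policy` and `toy_search` are hypothetical hand-written stand-ins. Nothing below reflects xAI's internal tool interface.

```python
from typing import Callable, Dict, List, Optional, Tuple

Step = Tuple[str, str, str]                            # (tool, argument, observation)
Policy = Callable[[str, List[Step]], Tuple[str, str]]  # returns (action, argument)


def run_agent(policy: Policy, tools: Dict[str, Callable[[str], str]],
              question: str, max_steps: int = 5) -> Tuple[Optional[str], List[Step]]:
    """Alternate between tool calls and observations until the policy chooses to answer."""
    history: List[Step] = []
    for _ in range(max_steps):
        action, argument = policy(question, history)
        if action == "answer":
            return argument, history
        observation = tools[action](argument)
        history.append((action, argument, observation))
    return None, history  # step budget exhausted without an answer


# Hypothetical stand-ins: a lookup-table "search engine" and a hand-written policy.
TOY_INDEX = {"tallest mountain": "Mount Everest"}


def toy_search(query: str) -> str:
    return TOY_INDEX.get(query, "no results")


def toy_policy(question: str, history: List[Step]) -> Tuple[str, str]:
    if not history:
        return "search", "tallest mountain"  # a trained model would write its own query
    return "answer", history[-1][2]          # answer from the latest observation


answer, steps = run_agent(toy_policy, {"search": toy_search}, "What is the tallest mountain?")
print(answer, len(steps))  # Mount Everest 1
```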

I remember this popular post from a few days ago about this crazy word puzzle which had something to do with legs. Can you help me find it?

Investigating puzzle post

- The request is for a popular post from early July 2025 about a crazy word puzzle involving legs.

- Let’s try searching for relevant X posts from July 1 to July 9, 2025.

- Maybe searching for ‘viral word puzzle about legs’ could help find recent posts.

Searching for viral word puzzle about legs

𝕏@iquantified

𝕏@skeletonNaughty

𝕏@AZ_NYsles

+3 others

Searching for (word puzzle) legs min_faves:100 since:2025-07-01 until:2025-07-09 filter:has_engagement

𝕏@LegsEmporium

𝕏@chelsbrina13

- Posts from the search show a puzzle about words ending with homophones for leg parts like toe, calf, knee, shin.

- Many posts mention ‘toe, calf, knee, shin’ as the purple category in Connections puzzle #756.

Exploring puzzle details

- Posts around July 7-8, 2025, suggest the viral post is about the NYT Connections puzzle’s absurd category.

- Let’s search for ‘NYT Connections puzzle #756 answers’.

Searching for NYT Connections puzzle #756 answers

nytimes.com

mashable.com

cnet.com

+2 others

- For July 6, 2025, puzzle #756’s purple category was words ending with homophones of leg parts.

- The ‘crazy’ part is the stretch, like ‘shun’ sounding like ‘shin’ in some accents.

Searching for connections (toe calf knee shin) since:2025-07-06 until:2025-07-09 min_faves:100

Searching for nyt connections crazy leg puzzle homophones

𝕏@DreamWeaver5166

𝕏@iquantified

𝕏@VKlker

+3 others

- A post with 660 likes mentions ‘toe calf knee shin they’re homophones,’ which could be the popular one.

- Now, let’s search X for posts with ‘homophones’ and ‘parts of the leg’ from July 6 to 9, 2025, requiring at least 100 favorites.

Searching for homophones “parts of the leg” since:2025-07-06 until:2025-07-09 min_faves:100

Searching for connections legs OR leg since:2025-07-06 until:2025-07-09 min_faves:500

Analyzing search results

- A new search found a reply post with 5052 likes, quoting a meme about the puzzle’s absurdity.

- Another search revealed a post with 89123 likes, likely the viral one, quoting a complaint about the puzzle.
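The search strings in the transcript above combine keywords with X advanced-search operators: `min_faves:`, `since:`, `until:`, `filter:has_engagement`. A hypothetical helper that assembles such a string could look like the sketch below. It only builds the query text and does not call any X API. The function and parameter names are illustrative, and the operators are used exactly as they appear in the transcript.

```python
from datetime import date
from typing import Iterable, Optional


def build_x_search_query(terms: str, since: date, until: date,
                         min_faves: Optional[int] = None,
                         extra_operators: Iterable[str] = ()) -> str:
    """Assemble an X advanced-search string from keywords and operators."""
    parts = [terms]
    if min_faves is not None:
        parts.append(f"min_faves:{min_faves}")  # minimum number of likes
    parts.append(f"since:{since.isoformat()}")  # start date, YYYY-MM-DD
    parts.append(f"until:{until.isoformat()}")  # end date, YYYY-MM-DD
    parts.extend(extra_operators)
    return " ".join(parts)


query = build_x_search_query("(word puzzle) legs", date(2025, 7, 1), date(2025, 7, 9),
                             min_faves=100, extra_operators=["filter:has_engagement"])
print(query)
# (word puzzle) legs min_faves:100 since:2025-07-01 until:2025-07-09 filter:has_engagement
```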

We have made further progress on parallel test-time compute, which allows Grok to consider multiple hypotheses at once. We call this model Grok 4 Heavy, and it sets a new standard for performance and reliability. Grok 4 Heavy saturates most academic benchmarks and is the first model to score 50% on Humanity’s Last Exam, a benchmark “designed to be the final closed-ended academic benchmark of its kind”.
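xAI does not describe here how Grok 4 Heavy combines its parallel hypotheses. One well-known way to use parallel test-time compute is self-consistency-style majority voting. Several independent attempts run concurrently, and the most frequent final answer wins. The sketch below shows that swapped-in technique, not xAI's method. `toy_attempt` is a hypothetical solver that stands in for sampled model outputs.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def parallel_majority_vote(solve: Callable[[int], str], n_samples: int = 8) -> str:
    """Run n_samples (>= 1) independent attempts concurrently; return the most common answer."""
    with ThreadPoolExecutor(max_workers=n_samples) as pool:
        answers: List[str] = list(pool.map(solve, range(n_samples)))
    return Counter(answers).most_common(1)[0][0]


def toy_attempt(attempt_id: int) -> str:
    """Hypothetical solver: wrong on every third attempt, right otherwise."""
    return "17" if attempt_id % 3 == 0 else "42"


print(parallel_majority_vote(toy_attempt, n_samples=9))  # 42
```

Each single attempt here is wrong one time in three, but the vote over nine attempts (six "42", three "17") recovers the majority answer. This is the intuition behind spending more compute at inference time.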

Grok 4 represents a leap in frontier intelligence, setting a new state of the art for closed models on ARC-AGI V2 with 15.9% (nearly double Opus’s ~8.6%, +8pp over the previous high). On the agentic Vending-Bench, it dominates with $4694.15 net worth and 4569 units sold (averages across 5 runs), vastly outpacing Claude Opus 4 ($2077.41, 1412 units), humans ($844.05, 344 units), and others. Grok 4 Heavy leads USAMO’25 with 61.9% and is the first model to score above 50% (50.7%) on Humanity’s Last Exam (text-only subset), demonstrating unparalleled capabilities in complex reasoning through scaled reinforcement learning and native tool use.

Figure: benchmark results for LiveCodeBench (Jan – May), competitive coding; USAMO 2025, olympiad math proofs; HMMT 2025, competitive math; ARC-AGI v2 Semi Private, pattern recognition.

Grok 4 API empowers developers with frontier multimodal understanding, a 256k context window, and advanced reasoning capabilities to tackle complex tasks across text and vision. It integrates real-time data search across X, the web, and various news sources via our newly launched live search API, enabling up-to-date, accurate responses powered by native tool use. With enterprise-grade security and compliance (including SOC 2 Type 2 attestation and GDPR and CCPA compliance), the API ensures robust protection for sensitive applications. Grok 4 is coming soon to our hyperscaler partners, making it easier for enterprises to deploy at scale for innovative AI solutions.
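A minimal sketch of preparing a Grok 4 API call with the Python standard library follows. It also runs a rough check against the 256k context window mentioned above. Several details are assumptions to verify against xAI's API reference: the OpenAI-compatible endpoint URL, the model name `grok-4`, the `XAI_API_KEY` environment variable, and the ~4-characters-per-token heuristic. The request is built but not sent.

```python
import json
import os
import urllib.request

# Assumptions: an OpenAI-compatible chat-completions endpoint and the model name "grok-4".
XAI_CHAT_URL = "https://api.x.ai/v1/chat/completions"
CONTEXT_WINDOW_TOKENS = 256_000  # "256k" read as 256,000 tokens


def rough_token_count(text: str) -> int:
    """Crude heuristic (~4 characters per token); real tokenizers differ."""
    return len(text) // 4 + 1


def build_grok_request(prompt: str, model: str = "grok-4",
                       max_tokens: int = 1024) -> urllib.request.Request:
    """Build (but do not send) a POST request for a single-turn chat completion."""
    if rough_token_count(prompt) + max_tokens > CONTEXT_WINDOW_TOKENS:
        raise ValueError("prompt plus completion budget likely exceeds the context window")
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        XAI_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ.get('XAI_API_KEY', '')}",
        },
        method="POST",
    )


req = build_grok_request("Summarize the AlphaGenome announcement in three bullet points.")
print(req.get_method(), req.full_url)
# Sending it would be urllib.request.urlopen(req), which needs a valid XAI_API_KEY.
```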

Speak with Grok in our upgraded Voice Mode, featuring enhanced realism, responsiveness, and intelligence. We introduce a serene and brand-new voice, redesigning the conversations to make them even more natural.

And now, Grok can see what you see! Point your camera, speak right away, and Grok pulls live insights, analyzing your scene and responding to you in real time from within the voice chat experience. We are proud to present this model trained in-house, with our state-of-the-art reinforcement learning framework and speech compression techniques.

xAI will continue scaling reinforcement learning to unprecedented levels, building on Grok 4’s advancements to push the boundaries of AI intelligence. We plan to expand the scope from verifiable rewards in controlled domains to tackling complex real-world problems, where models can learn and adapt in dynamic environments. Multimodal capabilities will see ongoing improvements, integrating vision, audio, and beyond for more intuitive interactions. Overall, our focus remains on making models smarter, faster, and more efficient, driving toward systems that truly understand and assist humanity in profound ways.

@@@@@@

Here we cover advances in Machine Learning and in Large and Small Language Models in Healthcare, Pharmaceutical, Medical and Life Sciences.

With the announcement of DeepSeek on January 27, 2025, it became compelling to cover several aspects of this hot Artificial Intelligence Technology.

In the following article, published on 1/28/2025, we covered four topics:

DeepSeek-V3 and Reasoning Model R1: Four Views (a) Explanations (b) The Chinese Perspective (c) DeepSeek Impact on Demand for Inference Chips & Training Chips, and (d) LPBI Group: Expert Content for ML Models in Healthcare, Pharmaceutical, Medical and Life Sciences

Curator: Aviva Lev-Ari, PhD, RN

See also,

Advanced AI: TRAINING DATA, Sequoia Capital Podcast, 31 episodes

Who shall be consulted?

- Mo Gawdat: AI’s IQ is Doubling Every 5.7 Months—Should We Be Worried?

https://youtu.be/NeVRtDe8EG8?si=CqIinEC4zYA56xaR

- Mo Gawdat – The Future of AI and How It Will Shape Our World | Prof G Conversations

https://www.youtube.com/watch?v=Q6B2ceRNKL8

- Sequoia

a. View From The Top with Roelof Botha, Managing Partner of Sequoia Capital

https://www.youtube.com/watch?v=nQAZ3iV3gSg

b. The AI opportunity: Sequoia Capital’s AI Ascent 2024 opening remarks

Sequoia Capital AI Ascent speakers:

- Sonya Huang

- Pat Grady

- Konstantine Buhler

https://www.youtube.com/watch?v=TDPqt7ONUCY&t=22s

c. Sequoia Capital Podcasts on AI Training Data

31 episodes

https://www.youtube.com/playlist?list=PLOhHNjZItNnMm5tdW61JpnyxeYH5NDDx8

- PureTech, Daphne Zohar

Senior Advisor & Board Observer

Daphne Zohar is the founder and chief executive officer and a member of the Board of Directors at Seaport Therapeutics. Previously, she was the founder, chief executive officer and a Board Member of PureTech Health (Nasdaq: PRTC, LSE: PRTC) where she also co-founded PureTech’s entities, including Karuna Therapeutics (acquired by Bristol Myers Squibb). A successful entrepreneur, Ms. Zohar created PureTech, assembling a leading team to help implement her vision for the company, and was a key driver in fundraising, business development and establishing the underlying programs and platforms that have resulted in PureTech’s productive R & D engine, which led to 28 new medicines being advanced via the company’s Wholly Owned Pipeline and Founded Entities, including two that received both U.S. Food and Drug Administration clearance and European marketing authorization and a third (KarXT) that has been filed for FDA approval. PureTech’s track record of clinical success is approximately 6 times better than the industry average and 80 percent of the clinical studies run by PureTech and its Founded Entities have been successful. Ms. Zohar has been recognized as a top leader and innovator in biotechnology by a number of sources, including EY, Fierce Pharma, BioWorld, MIT Technology Review, The Boston Globe and Scientific American. She serves on the BIO (Biotechnology Innovation Organization) Board Executive Committee as well as the Health Section Committee and is co-chair of the Strategy and Policy Committee of the board. Ms. Zohar is a member of the Duke-Margolis Center Policy Roundtable on the Inflation Reduction Act (IRA) and the Health Affairs IRA Observatory. She is also a co-founder and host of the Biotech Hangout podcast, a weekly discussion of biotech news with a group of industry leaders and experts.

WHERE DOES LPBI Group’s IP Portfolio FIT?

- EXPERT CONTENT for ML Models in the domains of Healthcare, Pharmaceutical, Medical and Life Sciences

9 gigabytes of TRAINING Data:

- Journal articles (+6200) – Text & Images

- 48 e-books – Text & Images

- 100 Conference e-Proceedings and 50 Tweet Collections – Text

- 7500 biological images – prior art – Images

- +300 audio podcasts – Text & Audio

This multimodal repository can be treated as TRAINING DATA for Foundational AI models in Healthcare

- Each category of research in our Journal ontology with +50 articles is usable as input for a Small Language Model (SLM) based on Expert content [N ~ 256]

- Each e-Series of our e-Books Series [Series A, B, C, D, E] is usable as input for a Large Language Model (LLM) based on Expert content [N = 18 volumes, which include ~2,700 curation articles from our Journal]
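Selecting the Journal categories large enough to serve as an SLM corpus amounts to grouping articles by ontology category and applying a size threshold. A minimal sketch follows, reading "+50 articles" as "at least 50". The record layout (`id`, `categories`), the sample data, and the helper names are hypothetical. LPBI Group's actual ontology export format is not described here.

```python
from collections import defaultdict
from typing import Dict, Iterable, List

MIN_ARTICLES = 50  # "+50 articles" read as "at least 50" (assumption)


def group_by_category(articles: Iterable[dict]) -> Dict[str, List[int]]:
    """Map each ontology category to the IDs of articles filed under it."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for article in articles:
        for category in article["categories"]:  # an article may sit in several categories
            groups[category].append(article["id"])
    return dict(groups)


def slm_candidate_categories(groups: Dict[str, List[int]],
                             min_articles: int = MIN_ARTICLES) -> List[str]:
    """Categories large enough to serve as the corpus for one category-specific SLM."""
    return sorted(name for name, ids in groups.items() if len(ids) >= min_articles)


# Hypothetical records: 60 genomics articles, 10 imaging articles, 1 filed under both.
articles = [{"id": i, "categories": ["Genomics"]} for i in range(60)]
articles += [{"id": 100 + i, "categories": ["Imaging"]} for i in range(10)]
articles.append({"id": 200, "categories": ["Genomics", "Imaging"]})

groups = group_by_category(articles)
print({name: len(ids) for name, ids in groups.items()})  # {'Genomics': 61, 'Imaging': 11}
print(slm_candidate_categories(groups))                  # ['Genomics']
```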

URLs for the English-language Edition by e-Series:

Series A: Cardiovascular Diseases ($515) – six volumes

https://www.amazon.com/gp/product/B07P981RCS?ref_=dbs_p_mng_rwt_ser_shvlr&storeType=ebooks

Series B: Frontiers in Genomics ($200) – two volumes

https://www.amazon.com/gp/product/B0BSDPG2RX?ref_=dbs_p_pwh_rwt_anx_b_lnk&storeType=ebooks

Series C: Cancer & Oncology ($175) – two volumes

https://www.amazon.com/gp/product/B0BSDWVB3H?ref_=dbs_p_mng_rwt_ser_shvlr&storeType=ebooks

Series D: Immunology ($325) – four volumes

https://www.amazon.com/gp/product/B08VVWTNR4?ref_=dbs_p_pwh_rwt_anx_b_lnk&storeType=ebooks

Series E: Patient-Centered Medicine ($274) – four volumes

https://www.amazon.com/gp/product/B0BSDW2K6C?ref_=dbs_p_mng_rwt_ser_shvlr&storeType=ebooks
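As a quick consistency check, the volume counts of the five e-Series listed above add up to the N = 18 volumes cited for the LLM corpus. The same tally gives the combined list price of the English-language edition. The figures below are copied from the list. Only the arithmetic is added.

```python
# Volume counts and list prices (USD) exactly as given for each e-Series above.
E_SERIES = {
    "A": ("Cardiovascular Diseases", 6, 515),
    "B": ("Frontiers in Genomics", 2, 200),
    "C": ("Cancer & Oncology", 2, 175),
    "D": ("Immunology", 4, 325),
    "E": ("Patient-Centered Medicine", 4, 274),
}

total_volumes = sum(volumes for _, volumes, _ in E_SERIES.values())
total_price = sum(price for _, _, price in E_SERIES.values())

print(total_volumes)  # 18, matching "N = 18 volumes"
print(total_price)    # 1489 (USD, all five e-Series)
```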

Using this EXPERT CONTENT as TRAINING DATA will harness the potential of Medical Text Analysis, applying AI technologies to uncover critical relations among research vectors in Drug Discovery:

PROMPTS:

- Gene – Disease

- Gene – Drug

- Drug – Disease
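Sentence-level co-occurrence is the simplest baseline for surfacing the three relation types above: Gene–Disease, Gene–Drug and Drug–Disease. The sketch below counts entity pairs mentioned in the same sentence. It is a swapped-in illustrative technique, not LPBI Group's method. The tiny gene, drug and disease dictionaries are hypothetical. Co-occurrence only flags candidate relations and says nothing about direction or causality.

```python
import itertools
import re
from collections import Counter
from typing import Set

# Hypothetical mini-dictionaries; a real pipeline would use curated vocabularies.
GENES = {"BRCA1", "TP53"}
DRUGS = {"olaparib"}
DISEASES = {"breast cancer"}


def mentions(sentence: str, vocabulary: Set[str]) -> Set[str]:
    """Vocabulary terms that appear in the sentence as whole words (case-insensitive)."""
    lowered = sentence.lower()
    return {term for term in vocabulary
            if re.search(r"\b" + re.escape(term.lower()) + r"\b", lowered)}


def cooccurrence_relations(text: str) -> Counter:
    """Count Gene-Disease, Gene-Drug and Drug-Disease pairs mentioned in the same sentence."""
    counts: Counter = Counter()
    for sentence in re.split(r"(?<=[.!?])\s+", text):
        genes, drugs, diseases = (mentions(sentence, v) for v in (GENES, DRUGS, DISEASES))
        for label, left, right in (("Gene-Disease", genes, diseases),
                                   ("Gene-Drug", genes, drugs),
                                   ("Drug-Disease", drugs, diseases)):
            for pair in itertools.product(sorted(left), sorted(right)):
                counts[(label, *pair)] += 1
    return counts


sample = ("BRCA1 mutations raise breast cancer risk. "
          "Olaparib targets BRCA1-deficient tumors. "
          "Olaparib is approved for some breast cancer patients.")
for relation, n in sorted(cooccurrence_relations(sample).items()):
    print(relation, n)
```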

2. TRAINING DATA

- AI, Security and the New World Order ft. Palo Alto Networks’s Nikesh Arora

Hosted by: Sonya Huang and Pat Grady, Sequoia Capital

https://youtu.be/rqgCXcd9U1U?si=Mp9HvHPHlFiAzAPK

3. Why LPBI Group is for Microsoft

- Satya Nadella – Microsoft’s AGI Plan & Quantum Breakthrough

https://youtu.be/4GLSzuYXh6w?si=wGozHtqSe848UzZy

- Microsoft Quantum Computer

- Microsoft CTO Kevin Scott on How Far Scaling Laws Will Extend | Training Data

https://www.youtube.com/watch?v=aTQWymHp0n0

4. Future of AI and Data

- Snowflake CEO Sridhar Ramaswamy on the Future of AI and Data

https://youtu.be/YbJoUbMoglI?si=8mGP_enzreZVo8y3

- AWS & LLM – Layers Architecture

Amazon Web Services is a profit-generating powerhouse

Amazon is typically known as the world’s largest e-commerce company, but it also leads the cloud computing industry through its Amazon Web Services (AWS) platform. AWS provides hundreds of services to help businesses transition into the digital age, but it has also become the center of Amazon’s growing portfolio of AI projects.

Management believes every digital application we use in daily life will eventually be infused with AI. AWS wants to be the go-to provider that businesses use to deliver those services, which will involve dominating the three core layers of AI.

Hardware is the bottom layer. AWS operates AI data center infrastructure powered by Nvidia’s industry-leading graphics processing units (GPUs), but it has also designed its own chips, including a new variant called Trainium2, which can save developers up to 40% on training costs compared to GPUs from suppliers like Nvidia.

Large language models (LLMs) make up the middle layer. The AWS Bedrock platform offers developers access to over 100 ready-made LLMs from third parties like Anthropic and even DeepSeek, helping them accelerate their AI projects. AWS also built a family of models in-house called Nova, which can reduce development costs by up to 75% compared to other LLMs on Bedrock, and major customers like Palantir Technologies are already using them.

Software is the third and final layer. Amazon embedded an AI-powered virtual assistant into AWS called Q, which can help businesses identify trends in their data, write computer code for software projects, and perform other tasks. Amazon used Q internally late last year for a project that saved the company $260 million and an estimated 4,500 developer years, according to CEO Andy Jassy on the fourth-quarter earnings call. Q’s capabilities will expand over time, creating new revenue streams for AWS.

AWS generated $107.5 billion in revenue during 2024. Even though that represented just 16.8% of Amazon’s total revenue of $637.9 billion, it accounted for 58% of the company’s total $68.6 billion in operating income. In other words, the cloud platform is the profitability engine behind Amazon’s empire.

https://finance.yahoo.com/news/prediction-spectacular-stock-join-nvidia-090700612.html
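The revenue and profit figures quoted above can be checked with simple arithmetic. The revenue share works out to about 16.85%. The implied AWS operating income and margin are derived here from the rounded 58% share, so they are approximations rather than reported figures.

```python
# Figures (USD billions, 2024) as quoted in the passage above.
aws_revenue = 107.5
amazon_revenue = 637.9
amazon_operating_income = 68.6
aws_share_of_operating_income = 0.58  # the quoted "58%", itself rounded

revenue_share = aws_revenue / amazon_revenue
implied_aws_operating_income = aws_share_of_operating_income * amazon_operating_income
implied_aws_operating_margin = implied_aws_operating_income / aws_revenue

print(f"AWS share of revenue: {revenue_share:.2%}")                           # 16.85%
print(f"Implied AWS operating income: ${implied_aws_operating_income:.1f}B")  # $39.8B
print(f"Implied AWS operating margin: {implied_aws_operating_margin:.0%}")    # 37%
```

An operating margin of roughly 37% on about a sixth of total revenue is what makes AWS "the profitability engine" in the passage's framing.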

Below we present UPDATES to each of these four Parts, and we have added new related Parts:

Agents

- Getting the Most From AI With Multiple Custom Agents ft Dust’s Gabriel H…

Hosted by: Konstantine Buhler and Pat Grady, Sequoia Capital

https://youtu.be/nOyOtxTA03A?si=E48TdHvbmb-oSwsJ

- Agents + LLM – Reflection AI’s Misha Laskin on the AlphaGo Moment for LLMs | Training Data

Misha Laskin is a former research scientist at DeepMind. He is embarking on his vision to build the best agent models by bringing the search capabilities of RL together with LLMs at his new company, Reflection AI.

Hosted by: Stephanie Zhan and Sonya Huang, Sequoia Capital

https://youtu.be/pYBOWDJ5HJc?si=D5_6spw2VfvfjweP

- REINFORCEMENT LEARNING AGENT + LLM for AGI —> From AlphaGo to AGI ft ReflectionAI Founder Ioannis Antonoglou

Ioannis Antonoglou, founding engineer at DeepMind and co-founder of ReflectionAI, has seen the triumphs of reinforcement learning firsthand. From AlphaGo to AlphaZero and MuZero, Ioannis has built the most powerful agents in the world.

Hosted by: Stephanie Zhan and Sonya Huang, Sequoia Capital

https://youtu.be/6CMCkeSU9FI?si=5l9b0Frl45JPLl_9

- Why Reinforcement Learning is the Future for AI Agents ft OpenAI’s Deep …

OpenAI’s Isa Fulford and Josh Tobin discuss how the company’s newest agent, Deep Research, represents a breakthrough in AI research capabilities by training models end-to-end rather than using hand-coded operational graphs.

Hosted by: Sonya Huang and Lauren Reeder, Sequoia Capital

https://youtu.be/bNEvJYzoa8A?si=gwI0QF5y1RfB6XJ8

- Sierra co-founder Clay Bavor on Making Customer-Facing AI Agents Delightful

Hosted by: Ravi Gupta and Pat Grady, Sequoia Capital

https://youtu.be/RAZFDY_jGio?si=VetEPDLTn_nmWE2F

- Jim Fan on Nvidia’s Embodied AI Lab and Jensen Huang’s Prediction that A…

Hosted by: Stephanie Zhan and Sonya Huang, Sequoia Capital

https://youtu.be/yMGGpMyW_vw?si=V3mqPg0BtK7HlZK7

AGI – Artificial General Intelligence

- AGI – Sam Altman Finally Admits “We’ve Been Doing it Wrong”

https://youtu.be/3s-Yd9h4Po0?si=oCtUJ8WyOCG82m5I

generativeAI – Generative Artificial Intelligence

- AI Leaders Reveal the Next Wave of AI Breakthroughs (At FII Miami 2025) …

https://youtu.be/kbG2CWrmKTw?si=Y7KNNgNgIne58tWF

- Google: Developing Google DeepMind’s Thinking Models

Speakers: Jack Rae, Logan Kilpatrick

Products Mentioned: Gemini, Google AI

https://youtu.be/35KhiPRvF88?si=cNkwhOZLytKk_NvI

- OpenAI —> Ep.# 137: GPT-4.5 and GPT-5 Release Dates, Grok 3, Forecasting New Jobs …

AI for Writers Summit. The Summit takes place virtually from 12:00pm – 5:00pm ET on Thursday, March 6, 2025

https://youtu.be/79gA0lX4Xi4?si=p7tSOtuKU5GDJAld

Database Architecture & Applications

- Turning Academic Open Source into Startup Success ft Databricks Founder …

Berkeley professor Ion Stoica, co-founder of Databricks and Anyscale, transformed the open source projects Spark and Ray into successful AI infrastructure companies.

Hosted by: Stephanie Zhan and Sonya Huang, Sequoia Capital

https://youtu.be/S_osYy3tsf8?si=C6001CzWGbHZpcin

- Building the Sales ‘System of Action’ with AI ft Clay’s Kareem Amin

Clay is leveraging AI to help go-to-market teams unleash creativity and be more effective in their work, powering custom workflows for everything from targeted outreach to personalized landing pages.

Hosted by: Alfred Lin, Sequoia Capital

https://youtu.be/K_F0ncVqWIo?si=2psynwY-zVLDM0J2

- Using AI to Build “Self-Driving Money” ft Ramp CEO Eric Glyman

Hosted by: Ravi Gupta and Sonya Huang, Sequoia Capital

https://youtu.be/gFWwB3z8ags?si=K9EBmhSTNtvwPUiI

- Turning Graph AI into ROI ft Kumo’s Hema Raghavan

Hosted by: Konstantine Buhler and Sonya Huang, Sequoia Capital

https://youtu.be/7rrLY1bwQ6g?si=aGLd9J1YHnIKIV4x

Offensive Security benefits from AI

- Cracking the Code on Offensive Security With AI ft XBOW CEO and GitHub C…

- Health outcomes & Biology

- Generative AI – Dario Amodei at Anthropic

- Company to watch: Harmonic

Hosted by: Konstantine Buhler and Sonya Huang, Sequoia Capital

https://youtu.be/9mIphDV9m9c?si=KdO17os0zdI_FmsI

- Why Vlad Tenev and Tudor Achim of Harmonic Think AI Is About to Change M…

About UCLA math department

Lean, a programming language and proof assistant, was gaining traction among mathematicians. The combination of Lean and the past decade’s rise of autoregressive models capable of fast, flexible learning convinced them the time was right, so they founded Harmonic. Their mission is both lofty (mathematical superintelligence) and immediately practical (verifying all safety-critical software).

Hosted by: Sonya Huang and Pat Grady, Sequoia Capital

https://youtu.be/NvAxuCIBb-c?si=NBQqTrCRKLhsJ-ku

Part 0: Competitive Scenes

Updated on 2/24/2025

- AI’s New World Order: US-China, War, Job Loss | Tyler Cowen

https://youtu.be/t6Je8EKhUyw?si=CdsH4vRYJKzMQIt3

Updated on 2/18/2025

- AGI (gets close), Humans: ‘Who Gets to Own it?’

https://youtu.be/oUtbRMatq7s?si=Jk1He9AoHC7Qufkj

- DeepSeek vs. OpenAI: How the Open-Source Revolution Threatens the Imperi…

https://youtu.be/74VVN9THfU8?si=Hu_lDnBCBt–CUPE

- How DeepSeek built cutting-edge AI without top GPUs

https://youtu.be/9KFEOv2Tcao?si=YwwacMJJujp7mqI5

- How China’s New AI Model DeepSeek Is Threatening U.S. Dominance

https://youtu.be/WEBiebbeNCA?si=4xzKj3Q2WZIWS4_K

- Is NVIDIA Crashing The Market? China’s & GOLD’s RISE | Louis Gave

https://youtu.be/wQiWRiifimE?si=nImuqxzmcvmcJR_B

- Aravind Srinivas, CEO of Perplexity, on DeepSeek and TikTok

https://youtu.be/EyMNxMzK-ow?si=nBczDCOGcARJ0CB2

- DeepSeek Shut Down! Expert Explains Why & What To Do Now

https://youtu.be/vsgS8cGX4oE?si=9NZtM6JrFRY3hlcq

- Google Gemini AI Hacked: The Scary Truth Revealed: Cyber Security Today,…

https://youtu.be/s44QaYjFOjs?si=ivBE4hX365FAtLp

- AI Stuns the World: DeepSeek Drama, Butcher Bots, OpenAI STARGATE, Singu…

https://youtu.be/K05SJlNRdUg?si=mU0zpIHui1qDY9YX

- DeepSeek vs. Open AI – The State of AI w/ Emad Mostaque & Salim Ismail

https://youtu.be/lY8Ja00PCQM?si=7j2ESdhBCcCGqR0y

- DeepSeek’s Popular AI App Is Explicitly Sending US Data to China | WIRED

https://www.wired.com/story/deepseek-ai-china-privacy-data/

- Groq CEO Jonathan Ross – Tech Giants in the Generative AI Age

https://youtu.be/IbarROtj4lU?si=QcQ6YLO67bqUZJLU

Part A: Explanations

Updated on 2/27/2025

- David Luan: DeepSeek’s Significance, What’s Next for Agents & Lessons fr…

David Luan:

Former VP of Engineering at OpenAI, a key contributor at Google Brain, co-founder of Adept, and now head of Amazon’s SF AGI Lab.

With your co-hosts:

@jacobeffron

- Partner at Redpoint; former PM at Flatiron Health

@patrickachase

- Partner at Redpoint; former ML Engineer at LinkedIn

@ericabrescia

- Former COO of GitHub; founder of Bitnami (acquired by VMware)

@jordan_segall

- Partner at Redpoint

https://youtu.be/AU9Fdgs0ZaI?si=BGFQIk5TOAZ1fhy1

Updated on 2/24/2025

- Deep Dive into LLMs like ChatGPT

https://youtu.be/7xTGNNLPyMI?si=yNwGc3qRCx3SUZ0k

- Deploying DeepSeek V3 on Kubernetes

https://blog.mozilla.ai/deploying-deepseek-v3-on-kubernetes/

- DeepSeek-V3 Explained 1: Multi-head Latent Attention | Towards Data Science

https://towardsdatascience.com/deepseek-v3-explained-1-multi-head-latent-attention-ed6bee2a67c4/

Updated on 2/18/2025

- The AI Coding Agent Revolution, The Future of Software, Techno-Optimism …

https://youtu.be/9xhDL2GbzaU?si=l1_Mww8iwkpUsAJM

- The Aravind Srinivas Interview: How Perplexity Is Revolutionizing The Future Of Search

https://youtu.be/fJLE_gYkvZY?si=jVGlGshODQc_X3LQ

- Dr. Ashish Bamania on Medium

Multi-Head Latent Attention Is The Powerful Engine Behind DeepSeek by Dr. Ashish Bamania

- CMU’s President’s Lecture Series: Sundar Pichai

https://youtu.be/rA9ysJpeD-c?si=8n3UQL2BXfEnJ6mi

- CITATION FOR SEARCH RESULTS —> Aravind Srinivas: Perplexity CEO on Future of AI, Search & the Internet …

https://youtu.be/e-gwvmhyU7A?si=5C2C45NeA1EHRUva

- Making AI accessible with Andrej Karpathy and Stephanie Zhan

https://youtu.be/c3b-JASoPi0?si=xxnGpoQhgcLUfOpB

- Dario Amodei – CEO of Anthropic | Podcast | In Good Company | Norges Ban…

https://youtu.be/xm6jNMSFT7g?si=l8E3LIhwvxFJf5fo

- Massive AI News : MAJOR AI Breakthrough, New AI Agent, Gemini 2, Claude …

https://youtu.be/N8eC4zoeOfk?si=7JthdCfI50RlXs_q

- The Dawn of Artificial General Intelligence?

https://youtu.be/a-8u-ZO-svQ?si=9DcPjLe_d65hwZuP

- EP93: GPT-5, Grok 3 & Claude 4? Plus AI Agents Economic Impact & Inevita…

https://youtu.be/dKEktwdJfpo?si=TmVHCPrOdjLRsSF2

Updated on 2/1/2025

- DeepSeek Just CRUSHED Big Tech AGAIN With JANUS PRO – New SHOCKING AI Model!

DeepSeek has released Janus Pro, a groundbreaking multimodal AI model that challenges industry giants like OpenAI and Nvidia with its efficiency and open-source accessibility. Janus Pro delivers strong performance in image generation and analysis, while its development cost of under six million dollars raises questions about the high spending of Silicon Valley AI labs. This disruptive innovation has sparked global interest, impacted tech markets, and highlighted DeepSeek as a serious contender in the AI race.

Key Topics:

- DeepSeek’s Janus Pro AI model that challenges OpenAI’s DALL-E 3 and GPT-4

- How Janus Pro outperforms big tech models on benchmarks like GenEval and DPG-Bench

- The innovative unified transformer architecture enabling multimodal tasks and open-source access

https://youtu.be/pN17MOfhZJk?si=lo6LFNQOyK1MPQUf

- Watch: Technical explanation of the breakthroughs in DeepSeek-V3 and reasoning model R1

https://youtu.be/8v2l6SJECW4?si=S6q06wYpnGaM3XDl

- Navigating a world in transition: Dario Amodei, CEO of Anthropic, in conversation with Zanny Minton Beddoes, Editor-in-Chief of The Economist. This session took place alongside the World Economic Forum Annual Meeting 2025.

https://youtu.be/uvMolVW_2v0?si=HFUZUCwvcRonJd-X

- Anthropic CEO Reveals New Details About DeepSeek R1

https://youtu.be/i-OAzG2mCUE?si=PFMPA6hXbK1MEljP

- DeepSeek R1 – Everything you need to know

Interviewee:

Ray Fernando, a former Apple engineer, gives an in-depth tutorial on DeepSeek AI and local model implementation. The conversation includes a detailed guide to setting up local AI environments using Docker and OpenWebUI, and to running DeepSeek on mobile using the Apollo app.

Key Points:

- Comprehensive overview of DeepSeek AI and its reasoning capabilities

- Discussion of data privacy concerns when using Chinese-hosted models

- Detailed tutorial on running AI models locally using Docker and OpenWebUI

- Comparison of different AI model providers (Fireworks, Grok, OpenRouter)

- Mobile implementation of AI models using the Apollo app

https://youtu.be/i9kTrcf-gDQ?si=3ohV9qeuphwAtPrX

Part B: The Chinese Perspective

Updated on 2/3/2025

- DeepSeek AI: What It Means and What Happens Next

https://youtu.be/EAgdQKPGuU0?si=42XvKbwxvGmHq7F8

- The Big Tech Show: What is DeepSeek AI? The Chinese company shaking up t…

https://youtu.be/IkWTCk5el-w?si=_xtH14zyzpdhLlZn

- DeepSeek CEO Exposed: The Man Disrupting AI?

https://youtu.be/CMQmL2igg9o?si=ljbOeBJJIVjDkwIp

Updated on 2/1/2025

- Is China’s DeepSeek the HOLY GRAIL of AI?

https://youtu.be/p6YmQNSfmfE?si=6CWLlGYT3skRXfMH

Part C: DeepSeek’s Potential Impact on Demand for Inference Chips and Training Chips

Updated on 2/11/2025

- CHIPS —>>> DeepSeek Isn’t Terrible: It’s a Sign of What’s Coming Next

https://youtu.be/LJYRpr554EA?si=-m4dD8u8AwKfOPd_

- Elon Musk on DeepSeek (YouTube search results)

https://www.youtube.com/results?search_query=Elon+Musk+on+Deepseek

Updated on 2/3/2025

- DeepSeek – How a Chinese AI Startup Shook Silicon Valley

https://youtu.be/xUzzWUlSk98?si=k5j84g383aUPEwtl

- DeepSeek, China, OpenAI, NVIDIA, xAI, TSMC, Stargate, and AI Megacluster…

https://youtu.be/_1f-o0nqpEI?si=z0A8eV963xtr4DZ3

Updated on 12/1/2025

Part D: LPBI Group: Expert Content for ML Models in Healthcare, Pharmaceutical, Medical and Life Sciences

Updated on 2/1/2025

Dr. Williams, please create a table of research categories with 50+ articles.

Part E: Latest from Google’s DeepMind on AlphaFold

Updated on 2/1/2025

Nobel Prize in Chemistry 2024 to David Baker, Demis Hassabis and John M. Jumper